Of what use would a model of artificial intelligence for service managers be? I will use this model as a framework for discussing artificial intelligence’s use in the delivery and management of services. This article is the first in a series that will explore AI from this service management perspective. The purpose of these articles is to help service managers wield AI in useful ways. Too often, we let our tools dictate to us how we should act, rather than exploiting our tools to solve our particular problems.

The technology we use to deliver services is increasingly making use of artificial intelligence and machine learning (ML). It has become fashionable for our service management tools to proclaim their artificial intelligence. As service managers, we should not treat AI and ML as black boxes, as mysterious technologies that somehow help us achieve our goals. It behooves us to understand what artificial intelligence and machine learning are really doing, what their limits are and how they compare with human intelligence and human learning.

What is Artificial Intelligence?

I consider artificial intelligence to be a non-biological, non-natural system1 that simulates human thought. Intelligence may be viewed from the perspective of what it is able to achieve and from the perspective of how it achieves results. Thus, we think especially of high performance in coming up with useful answers. We think of the ability to process data autonomously without being explicitly instructed how to perform that processing. Too, we parochial humans marvel at anything other than ourselves that is capable of intelligence.

What we consider to be “intelligent” is regularly evolving. There is probably a relation between how much we understand about how the human brain works and what we consider to be artificial intelligence. The electronic digital computers of the 1950s were thought to be surprisingly intelligent, when viewed from the perspective of what a “dumb” machine could achieve. The algorithms used in the 1980s and 1990s to achieve “intelligence” could defeat human opponents in certain cases, but they were exceedingly difficult to maintain and their learning quickly reached barriers.

The application of statistical learning techniques to multi-layered artificial neural networks redefined our concept of artificial intelligence. This current concept of artificial intelligence is likely to be replaced in the future, when artificial systems may achieve the ability to define their own purposes and provide interactions with humans that are indistinguishable from human emotions.

In the following discussion, I will use “artificial intelligence” to refer to the general domain of designing, building, deploying, using and maintaining these intelligent systems. I will use “an artificial intelligence” to refer to a particular artificially intelligent system with a particular defined purpose.

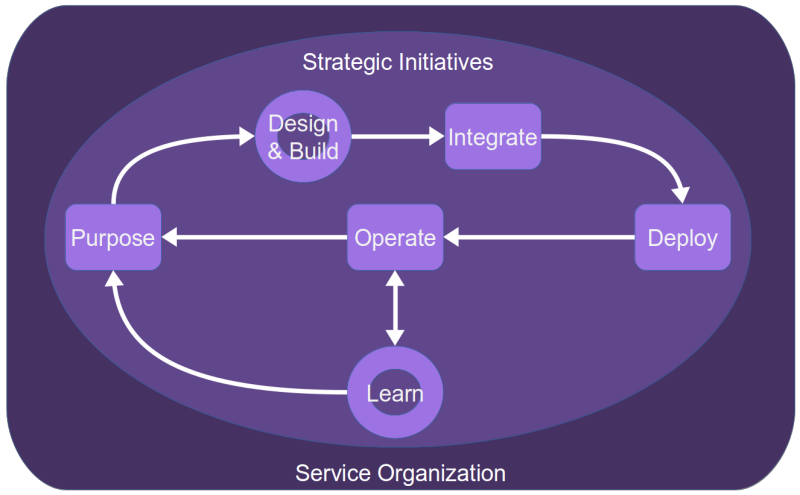

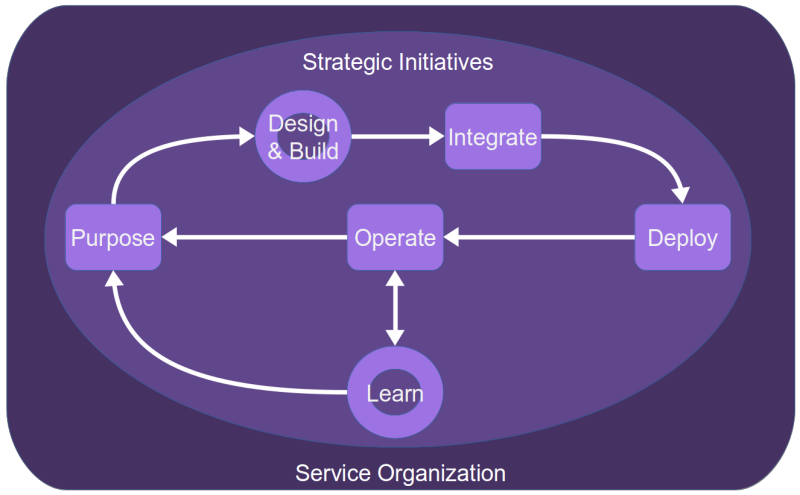

A Model for the Life of an Artificial Intelligence

Since an artificial intelligence is used as a component in a service system, it is subject to the overall developmental phases of that service system. However, there are activities specific to artificial intelligence and machine learning that merit highlighting. These phases will become important to understand, especially when I review the role of the service manager in artificial intelligence in future articles.

The Service Organization

Artificial intelligence is used by an organization to deliver or to manage services. This includes almost all economic activities. For those of you who think the delivery of services is something different from the manufacture of goods, recall simply that the act of assembling or transforming a raw material to create a good is simply another type of service.

The service organization is an organization conceived and structured to perform the services that fulfill its mission. The mission of an organization defines its overall purpose. This purpose frames, in turn, the purposes of the components of the service system managed by the organization. Artificial intelligences, like human agents, are examples of these components.

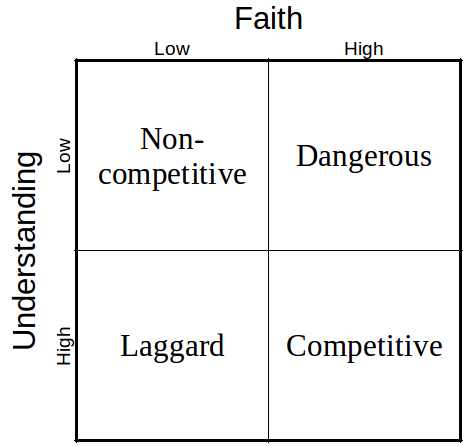

The service organization executes its mission according to its culture and values. Among the values of an organization is the degree to which it has faith in, or is skeptical of, the usefulness of artificial intelligence.

Believing that AI is a useful tool without understanding AI is dangerous. Consider the case of a police department that uses AI to predict criminality, without taking into account the biases of the training data. Or consider the telecommunications company that builds out its infrastructure based on AI algorithms that embed prejudice against certain communities. This prevents a fair and equal access to the Internet for all communities. In this way, the culture of an organization—what it takes for granted—has a strong impact on how it uses AI and the damages and benefits that that use may incur.

Strategies for AI Service Components

As a service organization evolves in its market spaces, its strategies for fulfilling its mission will evolve. Those strategies impact the use of AI in many ways. As we shall see later, the assembling of data for modeling and training may be a costly and time-consuming activity. So it is for the training of an AI, especially when supervised learning is used. Strategies directly influence the availability of resources to model and to train, as well as the timeliness that AI solutions should respect.

For example, a so-called “supervised learning” approach to pattern recognition requires that an AI be trained, generally with a large amount of data that has been labeled with its appropriate meaning. If, using supervised learning, you train an AI to distinguish pictures of cats from pictures of dogs, you train it with a large number of pictures, each of which has previously been labeled as a picture of a cat or a dog.

Suppose you want to use AI to help predict the impact of service system changes on service performance and availability. You might train the AI by feeding it with a large number of state transitions in your system, each labeled according to its impacts on the system. I think you can see that such training requires a huge up-front investment. The data for such training is not always readily available. It may be difficult to find the expert resources needed to reliably label the training data. Indeed, even experts might not understand how certain state changes influence system reliability.
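To make the idea of labeled training data concrete, here is a minimal sketch of supervised learning applied to the change-impact example. Everything in it is an assumption made for illustration: the two features (components touched, hours of testing), the labels, the six data points and the very simple nearest-centroid method. A real system would need far more data and a more capable model.

```python
from collections import defaultdict
from math import dist

# Hypothetical labeled training data. Each change is described by two
# made-up features (components touched, hours of testing) and carries a
# label that a human expert assigned after the fact.
LABELED_CHANGES = [
    ((1, 8.0), "no_impact"),
    ((2, 6.0), "no_impact"),
    ((1, 5.0), "no_impact"),
    ((9, 1.0), "degraded"),
    ((7, 0.5), "degraded"),
    ((8, 2.0), "degraded"),
]


def train(examples):
    """Learn one centroid (mean feature vector) per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(model, features):
    """Return the label whose centroid lies closest to the new example."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(LABELED_CHANGES)
print(predict(model, (8, 1.0)))  # a large, barely tested change
print(predict(model, (1, 7.0)))  # a small, well tested change
```

Even in this toy form, the cost structure is visible: the code is trivial, while every row of `LABELED_CHANGES` stands for an expert judgement that someone had to make and record.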

In addition to the allocation of resources and portfolio planning, strategic initiatives also influence AI in terms of the policies governing the tools and third party resources to be used. Suppose your organization plans a variety of services that depend on natural language processing (NLP). Using an intelligent chatbot to enhance customer service is an increasingly common example of the use of NLP. What is your organization’s strategy for providing that NLP? Will you outsource it to a third party or attempt to fulfill it in-house? Will you privilege a single provider, thereby benefiting from the synergies in training the system on your organization’s jargon, or do you find the risk of depending on a single provider to be too great?

Similarly, policies may be established regarding the frameworks and the tools your organization uses to develop AI solutions. Do you want to standardize on coding AIs with Python? Will you privilege a single framework, such as TensorFlow or Keras?

The Purpose of an AI

The purpose of a particular artificial intelligence must be defined. Although AI is supposedly modeled on human brains, there is currently a radical difference between an AI’s purpose and human teleology. In the spirit of the Universal Declaration of Human Rights, human babies are born without any particular purpose. Each baby may develop in a different direction and fulfill many different purposes throughout its life, usually in a simultaneous way.

Artificial intelligences are not (yet) general purpose intelligences. They must be created with a very specific purpose in mind. Once modeled to fulfill that purpose and trained with appropriate data, today’s AIs are useless for any other purpose. Consequently, the definition of purpose, within the context of the organization’s mission and according to its strategies, is primordial.

Consider a chatbot whose purpose is to support customers making service requests or having problems to resolve. For that chatbot to be effective, it must be trained using the particular goods and services provided by the organization. The same chatbot would be largely worthless if used for an organization in a different sector. But even within the same sector, chatbots are hardly general in purpose. Each organization has its own terminology, its own commercial values, its own image and branding, reflected in the language used in commercial discourse. Similarly, each organization has its own problems to resolve and its own ways of resolving those problems.

The purpose of an AI shapes all other aspects of its life.

Designing and Building an AI

The inputs to the design and build phase are the purpose of the AI, the policies and strategies that govern the use of AI and the various technical and service management personnel involved in the phase. The output of the phase is a service system component that can be integrated into the broader service system.

The vast majority of discussions about AI concern how an AI is designed and built. I do not intend to repeat or even summarize here this information. In addition to being a highly specialized activity, it is in rapid evolution. New AI design techniques are regularly being invented. Early AI design generally resulted in a rule-based solution, one that quickly showed its limits. Today, we are in a period characterized by so-called “deep learning” using multi-layered, artificial neural networks. While a huge advance over rule-based algorithms, some are already starting to speak of it reaching its limits. What will replace deep learning? I have no idea and refer you to the pundits that write about such topics.

Nevertheless, there are certain aspects of AI design and building that are more generic and merit a very brief mention. In particular, the designing and building of an AI requires that some data be assembled and processed; that data must be modeled mathematically; and some form of initial training of the model, tuning its parameters, must be done. Throughout these activities there are various types of tests performed to ensure the appropriateness of the design and the quality of the results.

In later articles I will return to this phase discussing, in particular, the role of the service manager during the design and build of an AI.

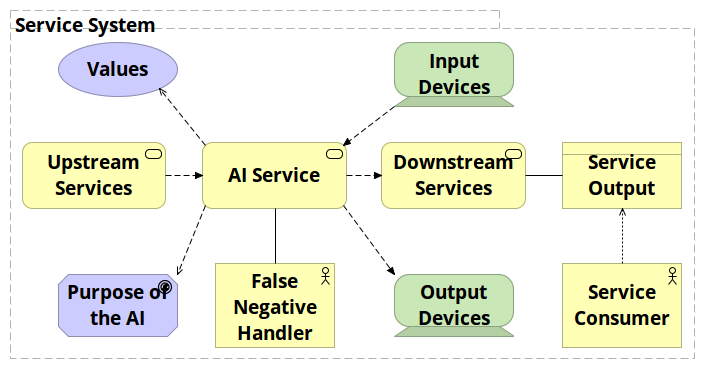

Integrating an AI into a Service System

Whatever the particular purpose of an AI, its general purpose is always to provide some advice that will be the basis of a decision. So, the overall service system shall need to make decisions based on the AI’s output. Furthermore, the service system will be providing input to the AI, thereby triggering the AI’s processing activities. AI integration is a matter of hooking up the AI to the components that provide the inputs and to the components that use the output.

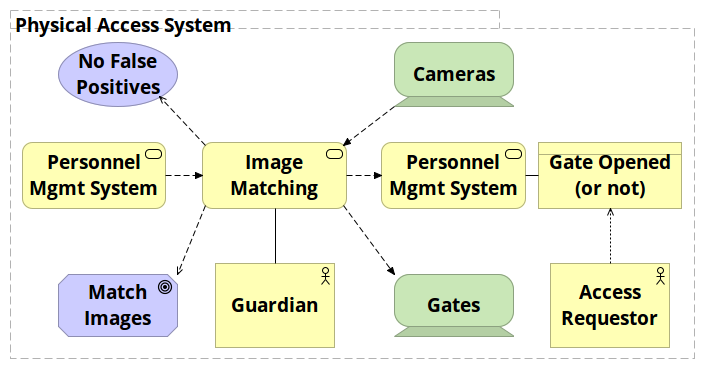

Suppose you have an AI component that attempts to recognize the pictures of people. The inputs will be collections of pixels that represent the images of people. The output will be judgements, associated with a certain probability, that the collection of pixels is the image of a certain person.

By itself, such pattern recognition is a sterile activity. But suppose it is integrated into a physical access management system designed to limit access to a building to authorized personnel only. In this case, there must be cameras used to capture the images of visitors and codecs used to transform the raw image into data usable by the pattern recognition system. In order to match images with known, authorized personnel, there must be a database containing the list of authorized personnel and the data to which the captured images need to be matched. The output of the AI needs to be sent to some signaling device, telling the person requesting access that they are authorized to enter (or not), as well as some form of gate or interlocking doors. The control event, whether it permits access or not, is likely to be logged somewhere. Finally, there may be some signaling to a human agent to handle false negative cases.
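The integration described above might be sketched as follows. This is only an illustration under stated assumptions: the recognizer is a stand-in function that returns a person identifier and a probability, and the class name, the threshold value and the decision labels are all invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple


@dataclass
class AccessController:
    """Hypothetical glue between the recognizer, the gate, the log and a human desk."""

    recognizer: Callable[[str], Tuple[str, float]]  # image -> (person, probability)
    authorized: Set[str]                            # stands in for the personnel database
    threshold: float = 0.95                         # assumed acceptance threshold
    log: List[tuple] = field(default_factory=list)

    def handle_visitor(self, image: str) -> str:
        person, probability = self.recognizer(image)
        if person in self.authorized and probability >= self.threshold:
            decision = "open_gate"
        else:
            # A refused visitor may be a false negative, so a human is notified.
            decision = "deny_and_notify_human"
        self.log.append((person, probability, decision))  # every control event is logged
        return decision


def fake_recognizer(image: str) -> Tuple[str, float]:
    """Stand-in for the trained AI; a real one would analyze pixels."""
    known = {"alice.jpg": ("alice", 0.99), "blurry.jpg": ("alice", 0.60)}
    return known.get(image, ("unknown", 0.0))


controller = AccessController(fake_recognizer, authorized={"alice"})
print(controller.handle_visitor("alice.jpg"))
print(controller.handle_visitor("blurry.jpg"))
```

The AI itself occupies a single line of `handle_visitor`. The rest is the surrounding service system: the database lookup, the decision rule, the logging and the route to a human agent.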

The output of the integration phase is a tested design that is ready to deploy into a live environment.

Deploying an AI

There is nothing, to my knowledge, that differentiates the deployment of an AI component from the deployment of any other IT component in a service system. There is, however, a particular aspect to deploying a trained and tested AI that gives it a huge advantage over humans.

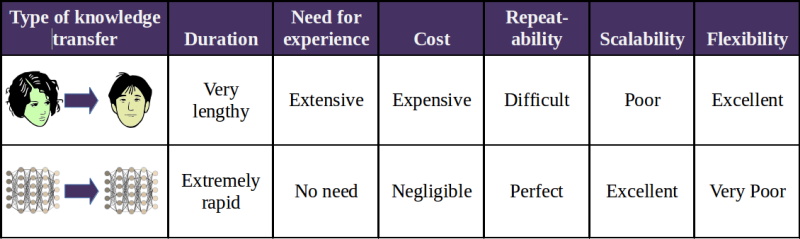

Suppose you have a human service delivery agent who has taken many months to learn the ins and the outs of a service and its customers. Now, suppose your business has expanded and you need another person to perform the same tasks. The experience of the first agent might help a little in training the second agent but, fundamentally, each agent must gain her or his own experience. In other words, training a human agent is not very scalable.

Compare this to deploying additional artificial intelligences. The “knowledge” acquired by any single AI can be redeployed in other AIs by a simple copy of the underpinning data and code. The entire experience of the first AI is immediately available to the second AI. Deploying multiple AIs is a highly scalable activity. Of course, the human agent has the considerable advantage of being able to flexibly reuse any acquired knowledge without explicit training. The copied AI would require explicit (re-)training before any such reuse.
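A minimal sketch of what such a copy amounts to, assuming the "knowledge" of a trained AI can be reduced to a set of learned parameters. The parameter names and values here are invented, and real models have millions of parameters stored in framework-specific formats.

```python
import json

# Hypothetical result of a long and costly training phase.
trained_parameters = {"weights": [0.8, -0.3], "bias": 0.1}


def score(parameters, inputs):
    """Apply the learned parameters to a new input (a simple weighted sum)."""
    weighted = sum(w * x for w, x in zip(parameters["weights"], inputs))
    return weighted + parameters["bias"]


# "Deploying" a second instance is a copy of the data, not a new training.
serialized = json.dumps(trained_parameters)
second_instance = json.loads(serialized)

print(score(trained_parameters, [1.0, 2.0]))
print(score(second_instance, [1.0, 2.0]))
```

Both instances give the same answer to the same input, which is precisely what cannot be achieved by hiring a second human agent.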

Operating an AI

AIs, being nothing more than computers programmed in a certain way using data structured in a certain way, are highly automatic. Thus, much of the operation of an AI is the same as the operation of any other software application. There are, however, a number of factors specific to the operation of an AI that merit further description. The most important factor, the continued learning of the AI, is so important that I treat it as a separate subject.

To understand the particular aspects of operating an AI we must recall that AIs based on neural networks are probabilistic, not deterministic, systems. The output of each layer of a neural network is associated with a set of probabilities. The final output is only ever an output of a certain probability. Compare this to the traditional, deterministic software application. Each command in software results in some unambiguous result. 1 + 2 = 3. If the value of an expression equals this, then do that, otherwise, do something else. Even if the path through software might be complex, there is no ambiguity about the final result.
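The contrast can be illustrated with a short sketch. The deterministic rule is the one from the paragraph above. The probabilistic part assumes a softmax function, which many classification networks use as their final step to turn raw scores into probabilities; the names and raw scores are invented.

```python
from math import exp


def deterministic_rule(value):
    """Traditional software: the same input always gives one unambiguous result."""
    return "do this" if value == 3 else "do something else"


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [exp(s - highest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores produced by the last layer of a network.
raw_scores = {"alice": 2.0, "bob": 0.5, "carol": 0.1}
probabilities = dict(zip(raw_scores, softmax(list(raw_scores.values()))))

print(deterministic_rule(1 + 2))
for person, probability in probabilities.items():
    print(f"{person}: {probability:.0%}")
```

The network never says "this is alice". It says that alice is the most probable candidate, and leaves it to the rest of the system to decide whether that probability is good enough.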

Neural networks, on the other hand, yield results that are normally not 100% true. They are only probable. If a pattern recognition AI is asked if a certain set of pixels corresponds to the image of a certain person, that AI can only say, “there is an x% probability that it is the picture of that person”. The output of a neural network is described as “probably approximately correct” (PAC). So, we need to understand how approximate it is and how probable that approximation is.
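For readers who prefer a symbolic statement: in computational learning theory, “probably approximately correct” is commonly written as the bound below. The symbols are not taken from this article. Here h is the learned model, error(h) is its error rate on new inputs, ε is the approximation error we are willing to tolerate and δ is the chance we accept of not meeting that tolerance.

```latex
\Pr\bigl[\operatorname{error}(h) \le \varepsilon\bigr] \;\ge\; 1 - \delta
```

Read this way, “how approximate” is ε and “how probable” is 1 − δ.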

This concept should not be strange to anyone dealing with quality management. Suppose a company manufactures metal screws. Even if the screws are sold as being 3 cm long, we all know that they are not exactly 3 cm long (whatever “exactly” might mean). Instead, we define an acceptable range of length (say, 3.00 ± 0.02 cm). Then, we can judge the quality of the screws in terms of the percentage of defects (screws longer than 3.02 cm or shorter than 2.98 cm).
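The arithmetic of the screw example can be worked through in a few lines. The nominal length and tolerance come from the text; the ten measurements are invented for the illustration.

```python
NOMINAL_CM = 3.00
TOLERANCE_CM = 0.02


def is_defective(length_cm):
    """A screw is a defect if it is longer than 3.02 cm or shorter than 2.98 cm."""
    # Rounding guards against floating-point noise exactly at the limits.
    return round(abs(length_cm - NOMINAL_CM), 6) > TOLERANCE_CM


def defect_rate(lengths_cm):
    """Share of the sample that falls outside the acceptable range."""
    return sum(is_defective(length) for length in lengths_cm) / len(lengths_cm)


# Hypothetical sample of ten measured screws.
sample = [3.00, 3.01, 2.97, 3.02, 3.05, 2.99, 3.00, 2.98, 3.01, 3.00]
print(f"{defect_rate(sample):.0%} defects")
```

In this sample only the 2.97 cm and 3.05 cm screws are defects. The screws measuring exactly 2.98 cm and 3.02 cm sit on the limits and are accepted.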

A similar situation exists for an AI. The mathematical model in an AI yields an approximation of what the output will probably be, given a certain set of inputs. The model is accepted for its ability to predict outputs, with a certain level of probability.

In Fig. 6, the data is modeled as a straight line, that is, a direct relationship of one input to one output. This model is not very good for predicting the output, especially in the higher range of values. In Fig. 7, the same data is modeled as a second order polynomial. It does a better job of approximating the values and will probably do a better job of predicting future outputs. Fig. 8 is an example of over-fitting the data. The model is perfect, insofar as the curve goes through 100% of the data points. However, this model will probably do a very bad job of predicting future outputs from future inputs.
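The same three situations can be reproduced in a small experiment. The sketch below does not use the data behind the figures. It assumes six invented observations that follow a quadratic trend with some noise, plus one “future” point that the models never see. Each model is an ordinary least-squares polynomial fit, computed with exact fractions so that rounding plays no role.

```python
from fractions import Fraction


def fit_polynomial(xs, ys, degree):
    """Least-squares fit; returns [c0, c1, ...] for c0 + c1*x + c2*x**2 + ..."""
    xs = [Fraction(x) for x in xs]  # exact arithmetic
    n = degree + 1
    # Augmented matrix of the normal equations.
    rows = [
        [sum(x ** (i + j) for x in xs) for j in range(n)]
        + [sum(y * x ** i for x, y in zip(xs, ys))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(rows[r][col]))
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(n):
            if r != col:
                factor = rows[r][col] / rows[col][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [rows[i][n] / rows[i][i] for i in range(n)]


def predict(coefficients, x):
    return float(sum(c * x ** k for k, c in enumerate(coefficients)))


# Hypothetical observations: y is roughly x squared, plus or minus 1.
TRAIN_X = [0, 1, 2, 3, 4, 5]
TRAIN_Y = [1, 0, 5, 8, 17, 24]
FUTURE_X, FUTURE_Y = 6, 36  # a later observation, not used for fitting

holdout_error = {}
for degree in (1, 2, 5):  # straight line, second order, over-fitted
    model = fit_polynomial(TRAIN_X, TRAIN_Y, degree)
    holdout_error[degree] = abs(predict(model, FUTURE_X) - FUTURE_Y)
    print(f"degree {degree}: error on the future point = {holdout_error[degree]:.1f}")
```

With this data the straight line misses the future point by about 9.9, the second order polynomial by 0.6 and the fifth order polynomial by 63, even though the latter passes exactly through all six training points.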

The example above uses the case of an AI whose purpose is to predict a certain future value, given certain future inputs. But the same issue of probable outputs also exists for AIs that are only intended to analyze an existing sample of data or for AIs intended to predict future behavior of a system.

What does PAC mean in an operational context? It means that the service owner needs to define what output is probable and approximate enough to be useful for the purpose of the service system. In all likelihood at least two thresholds should be defined. Above one threshold, the output of the AI is so probable that it will be accepted as “true”, with no questions asked. Below another threshold, the output of the AI is so improbable that it will be considered as “false”.

In the latter case, a different way of solving the problem must be found, generally by applying the efforts of a human agent. There is perhaps a grey zone between these two thresholds, which might be handled on a case by case basis.
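The two thresholds and the grey zone between them can be sketched as a simple triage rule. The threshold values and the action names are assumptions; in practice the service owner would set them according to the purpose of the service system.

```python
# Hypothetical thresholds chosen by the service owner.
ACCEPT_THRESHOLD = 0.90
REJECT_THRESHOLD = 0.40


def triage(probability):
    """Decide what the service system does with an AI output of a given probability."""
    if probability >= ACCEPT_THRESHOLD:
        return "accept"                 # treated as true, no questions asked
    if probability < REJECT_THRESHOLD:
        return "reject_and_escalate"    # treated as false, a human takes over
    return "grey_zone_review"           # handled case by case


for p in (0.97, 0.60, 0.20):
    print(p, triage(p))
```

Moving either threshold is a service management decision, not a technical one: it trades the cost of human intervention against the cost of acting on a wrong answer.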

A simple example of how this works out in operations is in the use of a chatbot. Anyone who has used an intelligent chatbot (i.e., one using natural language processing), has probably encountered chatbot responses of the sort, “I didn’t quite understand you. Please rephrase your remark” or “To answer that, I need to refer you to a human agent”. In other words, the answers that the chatbot might ascertain, given the inputs it has received, have a probability below the acceptable threshold. In this case, the chatbot is programmed to either ask for clarification (in the hopes that it will better understand you) or ask if you want to be transferred to a human.
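A minimal sketch of that chatbot behavior, assuming the NLP component returns a score for each candidate intent. The intent names, the threshold and the limit of one clarification request are invented for the example.

```python
CONFIDENCE_THRESHOLD = 0.75  # hypothetical acceptance threshold
MAX_CLARIFICATIONS = 1       # hypothetical: ask once, then hand off


def respond(intent_scores, clarifications_so_far):
    """Choose the chatbot's next move from the scores of the candidate intents."""
    intent, score = max(intent_scores.items(), key=lambda item: item[1])
    if score >= CONFIDENCE_THRESHOLD:
        return ("answer", intent)
    if clarifications_so_far < MAX_CLARIFICATIONS:
        return ("clarify", "I didn't quite understand you. Please rephrase your remark.")
    return ("handoff", "To answer that, I need to refer you to a human agent.")


print(respond({"reset_password": 0.92, "close_account": 0.05}, 0))
print(respond({"reset_password": 0.41, "close_account": 0.38}, 0))
print(respond({"reset_password": 0.41, "close_account": 0.38}, 1))
```

The third call shows the handoff: the chatbot has already asked for a rephrasing once, still cannot reach the threshold and so passes the conversation to a human.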

Thus, operating a chatbot is a case of determining how the chatbot will interact and collaborate with alternate means for achieving its purpose. I will return to this issue in a future article where I will discuss the role of the service manager relative to artificial intelligence.

AI Learning

Logically, the learning that an AI might achieve during operations is an extension of the training it underwent when it was first built. So learning is a form of continual improvement of the AI. There are various reasons why such improvement might be necessary:

- There are errors in the AI’s output

- The acceptable approximations and probabilities may need to change

- Trends in the inputs to the AI alter the desired output

- The structure of the model might require changing

Correction of Errors

At the end of the day, an artificial intelligence consists of code and data, just like any other software. As such, it is subject to errors that need correcting. Here are some examples of such errors.

Suppose the initial training of the AI used the supervised learning approach. Suppose, too, that a significant amount of the training data was mislabeled. As Yogi Berra might have said, this is Garbage In / Garbage Out all over again. If a natural language translation tool were initially given the wrong translations for certain terms, those translations shall need to be corrected. Or, the conversation flow of a chatbot might be mis-configured. As a result, it might jump to an inappropriate place and respond to input from a user with an inappropriate remark.

Changes to PAC

There are two typical reasons why an AI might have to make its output more probable and less approximate to be useful. On the one hand, the success of an AI will create a new frame in the minds of users for what is an acceptable level of performance. In time, the expectations of those users increase. They become less and less tolerant of the idiosyncratic improbabilities that an AI might emit.

Think of the online translation tools that have become so popular. At first, we were amazed that they could make any reasonable translations at all and we joked about the funny translations that were obviously wrong (to a human). But, in time, we came to expect higher and higher quality translations. There is also likely an effect where the pioneer users of artificial intelligence were much more tolerant of its idiosyncrasies than are those users in the mainstream. Moving into the mainstream requires increased reliability.

The second factor leading to the need for improved quality is competition. The technologies underpinning AI are evolving very rapidly. As new competitors enter fields using improved techniques, the quality of their services might leap far ahead of the established players. And so, those established players must meet or surpass the competition, else they will go out of business.

Trends in Input

Ideally, the initial training of an AI is done using data that is a good representation of the types and distribution of inputs that can be expected. Yet, what interests customers today is likely to change tomorrow. People think of new ways to use existing tools based on AI. Their interests change and so do the inputs they provide to AIs. As a result, the initial training of the AI may no longer reflect what is important to the customers.
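One way to notice such a shift is to compare the mix of inputs seen in training with the mix seen recently. The sketch below is an illustration only: the topic names and counts are invented, the alert level is an assumption, and the measure used (total variation distance) is one of several that could serve.

```python
from collections import Counter


def distribution(items):
    """Relative frequency of each category in a list of inputs."""
    counts = Counter(items)
    total = sum(counts.values())
    return {category: count / total for category, count in counts.items()}


def total_variation_distance(p, q):
    """0 means identical mixes of inputs; 1 means no overlap at all."""
    categories = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in categories)


# Hypothetical topics of customer requests.
training_topics = ["billing"] * 50 + ["outage"] * 40 + ["upgrade"] * 10
recent_topics = ["billing"] * 20 + ["outage"] * 30 + ["upgrade"] * 20 + ["privacy"] * 30

DRIFT_ALERT = 0.2  # hypothetical level above which retraining is reviewed

drift = total_variation_distance(distribution(training_topics), distribution(recent_topics))
print(f"drift = {drift:.2f}, review retraining: {drift > DRIFT_ALERT}")
```

Here a topic that did not exist in the training data, “privacy”, now accounts for 30% of the requests, and the drift measure of 0.40 exceeds the assumed alert level.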

As an example, one company specializing in the manufacture of women’s clothing created an AI to help customers find the right sizes and models to order. Those recommendations need to reflect the evolving fashions and changes to the availability of models. The tuning of the AI needs to take into account such data as the return of purchased items and the explanations of why they were returned.

Another example might be drawn from natural language processing. The rate of change in natural language use is very high. “Bad” comes to mean “good”; sentence fragments such as “truth be told” come to be understood as acceptable rather than moronic errors; international languages like English absorb cultural references from thousands of sources; dangling prepositions, a solecism up with which many could not put, evolve into standard forms that you get used to. If the purpose of an AI using NLP is to understand how people communicate, then it must be regularly updated with the new semantics, syntax and grammar found in the wild.

Changes to Mathematical Models

All of the reasons given above may ultimately lead to changes in how AIs model the relationships between the inputs and outputs. In addition, changes to the purpose of an AI or to the scope of problems that the AI is intended to resolve are likely. For example, image recognition tools generally started as tools to identify only the foreground subjects of an image. In time, the context of the foreground came to be important, too, whether the purpose is to identify the background, or simply to blur it.

As the use of AIs becomes prevalent, the needs for making them effective become better known. As a result, efforts are made to make available data that improve the effectiveness of the AI. As new types of data become available, so may the correlations among data types and the most useful way to model those relationships.

A Simplified Presentation

The model I have described above is, as with every model, an over-simplification. There are two aspects that the model does not treat adequately. First are the iterative, cyclic aspects of designing and using an artificial intelligence. I have lamely indicated them by the use of arrows and circles in the diagram of the model (Fig. 1).

Second, the model is presented from the point of view of the service provider, which is but one component of a service system. Equally important to services are the consumers of services. Service regulators and service provider competitors have their place in any service system, too. There are many other actors in a service system. We can summarize their effects by speaking of a general environment or context for services. This environment acts as an evolving catch-all for these other system components whose influence might be difficult to measure.

I justify this over-simplification by assuming that the mission, values and strategies of any single service provider should reflect the influences of these other components, just as they contribute to shaping the system as a whole.

No service provider is an island,

Entire of itself,

Every provider is a piece of the continent,

A part of the service system.

At the time of this writing, two more articles are planned. One will summarize what I believe a service manager should understand about artificial intelligence and machine learning. Another will discuss the responsibilities of the service manager for artificial intelligence, using the model described above.

The article A Model of Artificial Intelligence in a Service System by Robert S. Falkowitz, including all its contents, is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Notes

Dear Robert, I have gone through your article and believe me I am not an expert in the field. I thoroughly enjoyed the article as it was more a learning for me.

I am basically a Lean Practitioner, but definitely, this is another Lean Tool that can be used effectively in different industries, offices etc.

Thanks

It is fair to say that a lean approach has strongly influenced the thinking that has gone into this discussion. That being said, the model itself is not explicitly lean and can be used in virtually any management approach.

Thanks a lot. Interesting as always and I agree and support. This reminds me of a well-known case when it was suddenly determined that three of the four algorithms that have long been used in magnetic resonance imaging, work incorrectly. I. e. something similar to the artificial intelligence has long been sewn into an important medical device, and everyone believed it. In other words, our lives often depend on AI, whether we want it or not, whether we know it or not. This makes us pay special attention to the potential danger of working with AI not at the expert level. So this is not just a program, even called fashionable words. To use complex technologies just “to be on hype” does not mean to be correct by definition. Rather on the contrary, I think. To make artificial intelligence work well you need a great natural intelligence.

Following up on your last point, Tatiana, perhaps a simple analogy is useful. You are lost in the woods and your only tool for finding your way is a magnetic compass. But, as we know, the magnetic poles can wander about quite a bit, and much faster than our homes, mountains and lakes wander about. So, we can trust the compass only insofar as we understand how it works and have some means of calibrating it and deciding if it is useful for our purposes.