Start simple

I told my customer to start managing changes by using the simplest possible activity for change control, and then use a continual improvement approach to develop that activity in a way that meets the real needs of the organization and is also acceptable to the stakeholders. Let me call that simplest of processes a “minimum viable process”.

Term derived from Lean Startup

I have pirated this term, which is already being used in a somewhat different context. Students of the lean startup approach to managing an organization are familiar with the concept of the minimum viable product. They have extended this concept, by analogy, to the concept of a minimum viable process. Typically, they consider a minimum viable process to be the iterative process by which a minimum viable product is created and evolved. I would like to use the concept somewhat differently. In particular, I would like to examine what minimum viable service management processes could be and how they might lead to a much clearer understanding of how to manage services.

What is a minimum viable process?

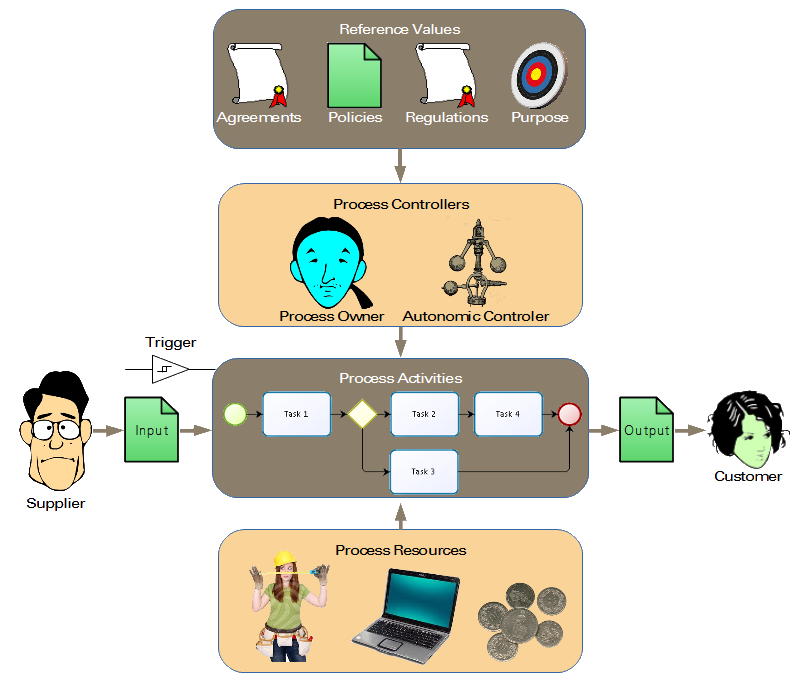

So what do I mean by “minimum viable process”? I mean very simply the process that can achieve its purpose with the least possible amount of definition and elaboration. As you know, we may define many different process elements:

- the purpose of the process

- the suite of activities to be performed, and in what order

- the triggering mechanism

- the inputs and outputs of the various activities (and hence of the process as a whole)

- the suppliers of the various inputs and the customers of the various outputs

- the rules, policies and other constraints that should be respected in performing the process activities

- the resources required to perform the activities of the process

- the tools used by those resources to support the execution of the process

- the process roles and their responsibilities

- the mapping of the organizational structure to the process roles

- the process metrics

- the expected levels of performance

I believe that a minimum viable process must have defined:

- its purpose

- its customers

- its final outputs

- its triggers

With those elements identified, it is possible to do useful work, work of value to someone. In other words, we need to know when to do the work; why we are doing it; what it needs to deliver; and to whom the output should be delivered. We are also able to know when the process should be used and how it is distinct from other processes.
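The distinction between the four mandatory elements and everything else can be sketched as a small data structure. This is only an illustration, not part of any framework: the class name, the field names and the example change control values are all assumptions made for the sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MinimumViableProcess:
    """Hypothetical model: four mandatory elements, the rest optional."""

    # The four elements the text treats as mandatory.
    purpose: str
    customers: list[str]
    final_outputs: list[str]
    triggers: list[str]
    # Optional elements; they may stay undefined until a real need appears.
    activities: Optional[list[str]] = None
    roles: Optional[dict[str, str]] = None
    metrics: Optional[list[str]] = None

    def is_viable(self) -> bool:
        """Return True when all four mandatory elements are non-empty."""
        return all([
            self.purpose.strip(),
            self.customers,
            self.final_outputs,
            self.triggers,
        ])


# Illustrative values only; a real organization would supply its own.
change_control = MinimumViableProcess(
    purpose="Make changes without causing avoidable harm to services",
    customers=["service owners"],
    final_outputs=["implemented change", "rejected change request"],
    triggers=["change request received"],
)
```

Here `change_control.is_viable()` holds even though no activities, roles or metrics have been defined, which is the point of the approach.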

Is this really a process?

Some might say that the purpose of the process need not be defined. Well, it might be true that someone can execute a process without understanding its purpose, if only the details of the activities and inputs are defined. Perhaps the executor of an activity does not understand its purpose, but whoever defined the activities and inputs must have had some understanding of it.

What about all those elements that I claim do not need to be defined? Surely the process activities need to be defined, you might say. Isn’t that precisely what we mean by a “process”?¹ From a customer’s perspective, however, the definition of the activities is largely irrelevant, so long as the output is delivered. Indeed, many organizations do the same work each time in completely unrepeatable ways. And work is often done in organizations without bothering to define roles and responsibilities. It is obvious that even a minimum viable process needs resources in order to be performed. My point is that it is not obligatory that those resources, and how they are mapped to process roles, be defined in advance of process execution. One can easily imagine an organization, especially a small start-up, where one uses whatever resources are available to do the needed work, and those resources can change with each process cycle. Similarly, I have excluded the process owner from the minimum viable process because it is not strictly necessary that someone play an ongoing role of governing and improving the process. However, it is clear that the elements of the minimum process (the trigger, the outputs, the purpose and the customers) must be defined by someone or some group. If this means that you would include the role of owner among the minimum elements, so be it.

Note that most process maturity models speak of a level “0” or “1”, wherein the process’ activities are not defined or, at least, they are not defined very much. In short, all of those process elements that we have been encouraged to define by various frameworks may have some value (in certain circumstances), but are not strictly necessary.

The minimum viable process and so-called “best practices”

Once an organization has defined a minimum viable process and its members do the work of that process, they are faced with the specific issues of what works well and what does not. For example, they might find that the process is not performed in a timely way, or is not done at all. The people concerned might decide to resolve this issue by clearly defining the process’ roles and responsibilities, or by specifying the activities to be performed.

Now, the argument of those who were raised on the milk of so-called “best practice” frameworks is that the organization has wasted time by not defining those roles at the outset. It has been inefficient and ineffective by ignoring the practices that have already been identified as well established.

But has it? Look at the typical project to implement “best practices”, where it takes months (or longer) to identify process gaps, to select and configure tools, to train people and to try to convince them of the benefits of working according to a certain process. And at the end of that project, there are still many recalcitrant stakeholders, there is still a lot of improvement needed in the process, and there is inevitably a series of compromises between what the process expert thinks ought to be done and what the process stakeholders agree to do. All too often, such projects are even cancelled before they ever deliver any benefits.

Benefits of starting with a minimum viable process

Here are some of the benefits of starting with a minimum viable process that each team of stakeholders will evolve according to its own needs and capabilities. The stakeholders are empowered and given responsibility from the very start. Continual improvement is embedded in the culture of the organization, rather than being implemented as an afterthought. Improvements are made in response to the real problems faced by the stakeholders, so they are self-motivated and do not have to have their arms twisted to buy in to the solution. When improvements are made in small batches using short feedback loops, the benefits of the process improvement are realized much more quickly than if one had to wait for a large, complex project to come to term. And if the “improvement” turns out to be a dud, it is much easier to back out of it and try something else instead.

Furthermore, because the process is defined in its most spare form, it is as lean as possible from the start. Unlike cases where ready-built processes are implemented, there is no waste built into the process at the very start. Minimum viable processes are unlike those processes recommended by so-called “best practice” frameworks that include all sorts of validations, other controls and activities that do not directly increase the value of the process’ output.

Of course, a process may have other elements defined, such as its activities, and still be a lean process. The issue is whether an organization should start with a minimum viable process and evolve from there, or whether it should start with what it is already doing, as recommended for Kanban², or whether it should start with a process defined in some framework. I do not recommend the third option, because of the waste inherent in most of these processes and the problem of imposing a process without the required, underpinning understanding of that process. The second option is a good one if the organization has a well-established track record of working according to some process. But the first option is of particular interest in a greenfield situation, when the organization is simply not doing the work of a certain process. This is very often the case with respect to service management processes. How many organizations are really managing availability? (Most of the ones that claim to do so are really just giving the name “availability manager” to a role that is little more than an incident manager role.) How many organizations have a process to manage a service portfolio? Or a process for what some would call “business capacity management”? For that matter, most organizations I have seen either completely ignore configuration control, or use automated tools to collect configuration data and pretend that they are thereby managing configurations.

Minimum viable process and process metrics

Most management frameworks emphasize the need to measure processes as a key element in ensuring the quality of their output and in improving them. The minimum viable process has everything defined to allow the most important measurements. Effectiveness is measured by comparing the output to the purpose. Process lead time is an efficiency metric. The compliance of how people work to policies and laws can be measured in any case. Measurement of compliance with the agreed process is also possible, especially regarding the respect of triggers and the delivery of output to the right parties. Of course, the more you agree on how to perform the process, the more you may wish to measure compliance with that agreed way of working. This leads us to the question of measuring process progress.
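As a rough sketch of the two measurements named above, the only data needed is what the minimum viable process already defines: when the trigger fired, when the output was delivered, and whether that output met the purpose. The function names and the sample values below are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def lead_time(triggered_at: datetime, delivered_at: datetime) -> timedelta:
    """Elapsed time from the trigger to delivery of the final output."""
    if delivered_at < triggered_at:
        raise ValueError("output cannot be delivered before the trigger")
    return delivered_at - triggered_at


def effectiveness(purpose_met: list[bool]) -> float:
    """Share of process executions whose output achieved the purpose."""
    if not purpose_met:
        raise ValueError("no executions to measure")
    return sum(purpose_met) / len(purpose_met)


# Hypothetical sample: one change took two days from request to delivery,
# and three of four executions achieved the purpose.
sample_lead_time = lead_time(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 3, 9, 0))
sample_effectiveness = effectiveness([True, True, False, True])
```

Neither function needs to know which activities were performed or who performed them.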

Progress may be measured from two perspectives. The first looks at the degree to which the potential customers, suppliers or inputs of the process are being served using the process. For example, there may be a configuration control process that is applied to only 60% of the configuration items in the organization. Or a change control process might be applied to only certain types of changes requested by certain process customers. The minimum viable process is perfectly measurable against this type of metric.
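This first kind of progress is a simple coverage ratio. A minimal sketch follows, in which the function name and the item counts are assumed for illustration; only the 60% figure comes from the example above.

```python
def coverage(items_under_process: int, items_in_scope: int) -> float:
    """Fraction of in-scope items actually handled through the process."""
    if items_in_scope <= 0:
        raise ValueError("scope must contain at least one item")
    if not 0 <= items_under_process <= items_in_scope:
        raise ValueError("controlled items must lie between 0 and the scope size")
    return items_under_process / items_in_scope


# Hypothetical counts: 600 of 1000 configuration items are under control.
ci_coverage = coverage(600, 1000)
```

With these counts `ci_coverage` is 0.6, matching the 60% example. The same ratio applies equally to types of change or to process customers.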

The second perspective of process progress concerns the degree to which the elements of the process are defined. When considered from the perspective of the minimum viable process, it becomes highly debatable whether a process with more elements defined shows greater progress than a process with fewer elements defined. In short, I reject the assumption that more is better, if applied in a general way, across all processes.

Under what circumstances is defining more process elements a form of progress? It depends on the degree of variability in the process inputs. Those inputs can vary in terms of their batch size, their complexity and the cadence at which they arrive. If all inputs are of identical batch size, with identical attributes and arriving at fixed intervals, then a highly defined, rigid process is very likely the most efficient way to produce the required outputs. An extreme example of this would be the ideal state of one-piece flow.

However, for processes in the realm of knowledge work, the degree of variability in the inputs makes one-piece flow quite impossible (at least, in the current state of the art). In such cases, it serves little purpose to define a process down to the smallest details, as the resulting process would be unable to handle the large number of exceptions that would inevitably occur.

Minimum viable process and adaptive case management

When a process must expect and handle the unexpected, greater definition of detail creates an unrealistic set of constraints on the work, making it much less efficient, with a higher level of coordination effort. When variability in the inputs of the process is high, the handling by the process unfolds as those inputs arrive and their nature is analyzed. The best example of this is the process of solving a crime. The clues enabling a solution arrive in no fixed order, at no fixed time and can be very different from case to case. From a minimum viable process perspective, this variability poses no difficulties. The output is always the same (a list of suspects with their motives, opportunities and weapons). The purpose, to identify the probable perpetrator, is unchanging, as is the customer for the process, namely, the judicial system that will try the case.

This adaptive approach to handling cases is applicable to a wide variety of domains, including much of the work of managing services. As I have already described the use of adaptive case management for service management, I will not go into greater detail here.

Perhaps the best example, though, would be problem management. We know why we wish to manage problems (to reduce the impact of incidents caused by those problems). We know what it must deliver as output (either an improved workaround or a definitive solution). We know to which customers that process output must be delivered (to the people resolving incidents or the people making the changes to remove the causes of problems). And we define what triggers problem management (the criteria for deciding which problems to track or to investigate). But there is no fixed set of steps that is guaranteed to lead us to any particular result. To paraphrase Tolstoy, each problem is unhappy in its own way. While we do have an arsenal of methods useful for managing problems, it behooves us to apply those methods in a flexible way, only when the required inputs for them are available and the method is appropriate to the desired results and the available resources.


¹There is, of course, a great deal of confusion in the IT service management realm about what really constitutes a process. The fault lies entirely with the various frameworks we use, which have introduced the confusion between a process and a discipline. The discipline of service level management, for example, actually includes at least 4 or 5 processes, each having its own triggers, activities and outputs. And so, although the ITIL® glossary provides a definition that corresponds largely to the traditional, received understanding of the term, chapter 4 of each ITIL volume belies that definition, naming as “processes” what we should be calling “disciplines”. As a compromise to resolve this dilemma, I propose that the term “process” be simply defined as “structured work”.

²As recommended by David J. Anderson in Kanban: Successful Evolutionary Change for Your Technology Business.

Very nice and intuitive. I think many, including me, have felt this. While we are all process folks, I have questioned the need for defining a process or putting elements in place before understanding the need or purpose. In particular, the users of the process elements face the heat during operations and, without understanding the purpose, make arbitrary mandatory rules, which in a larger organizational context lead to bureaucratic rules that tend to solidify and create bottlenecks.

Robert, I have been a great admirer of your work. No offense, but this one startles me for a number of reasons.

1. Please forgive my ignorance, but for me the message does not come across clearly.

2. I guess we are all already doing this. When we cannot define the complete process and its associated activities (input, the process itself and output) at the outset, we set up a bare minimum process anyway and move forward.

3. This is what almost everyone does, and then we introduce CSI or process maturity (CMMI), which happens over a period of time.

4. A bare minimum process setup is not by choice; most of the time it is forced by the nature of the business, or by the stringent timelines to initiate KT and start delivery.

5. The relaxed SLA / lean period of the first X months is actually there to give the service provider time to fix and streamline the gaps, be they technical, skill, process or partner gaps, and to ensure structured delivery after that period.

6. Also, most process improvement, even without any intervention from CSI / SIP, happens during the stabilization period of those initial X months, and the process maturity improves from 0 to maybe 2 or 3.

Having said that I really appreciate the time you have taken out to structure the thoughts. This is definitely a starting point for everyone and now your structure will further strengthen their approach.

Satyendra,

Your experience does not correspond to mine. In my experience, when organizations evolve processes they tend to make them more complex, not simpler. When they are under pressure to deliver some process, they frequently focus on the documentation of the process rather than the reality of what happens. In consequence of that, certain activities are defined and tools configured, but often the purpose of the work and the goals to achieve are simply left undefined and uncommunicated. The result is that people do work without understanding why they are doing it; since the process is defined in only broad outline it leaves a lot of leeway to the persons involved and without the guidance of understanding the purpose of the process, that purpose is often not achieved. I would challenge anyone to ask the people doing work to explain succinctly the purpose of that work. In my experience, the vast majority simply does not know. They say, instead, that the purpose is to follow the defined process or to achieve a certain quality level or to manage some entity or some other inanity.

The scenario to which you allude in your points 2 and 5 is the antithesis of what I am proposing. Rather than doing a shoddy job and then catching up once you use the process in production, I believe that the components of the minimum viable process provide a coherent and efficient way of working, so that the improvement cycles in production focus on adding value rather than fixing things that are broken.

Robert, sure that makes sense and that is exactly what I started with saying “for me the message is not clearly coming out”. However with your kind explanation I do understand now. Thanks for clarifying.

Robert, the best part about the minimum viable process is what you mentioned under benefits: each team of stakeholders will evolve it according to its own needs and capabilities. Continual improvement is embedded in the culture of the organization, rather than being implemented as an after-thought. Improvements are made in response to the real problems faced by the stakeholders, so they are self-motivated and do not have to have their arms twisted to buy in to the solution.

So this definitely follows the lean principle: no waste in any form.

Hi Robert, it is quite a useful approach when you need to define a new process. But I often have the problem that some kind of minimum viable process exists and it is not fit for purpose. For example: general ticket management instead of incident, problem and change management. Then it is really hard to change the way of working and awareness. So I see purpose definition as a CSF, and best practice as useful for inspiration and for identifying process relationships.

In general, I agree with you, Miroslav. The danger I have seen too frequently is that people use so-called “best practice” not as an inspiration, to be adapted as needed to the reality of the situation, but as the gospel truth, to be used without understanding. Insofar as making improvements is essentially a form of problem management, the danger is to make changes without understanding deeply enough the underpinning causes. The decision to use someone else’s process as a basis for one’s own does not really address that issue.

Sure! Purpose is the most important aspect of a process to me. With this, we can configure just enough elements and practices to deliver the expected output for the customer. After this first implementation, and only as necessary, we can integrate other elements and practices as the customer’s operation requires them.

Greetings from Mexico. DMT