A continual improvement maturity model♠

Over the past year, I have worked with several organizations wishing to start continually improving their services and how they manage them. Continual improvement can be a profound cultural change for an organization. Many teams find it difficult to adopt and internalize the principles of continual improvement. So I found it useful to model the developing maturity of an organization’s continual improvement capabilities, and I would like to share this model with you.

This continual improvement maturity model is not intended to be a one-size-fits-all model. While it was developed from the changes observed in various teams in very different types of organizations, I have direct knowledge of only a tiny fraction of the organizations trying to use continual improvement. Therefore, I hope that interested readers will share their own views and experiences. I have not found any other published maturity model for continual improvement, even though such models are in use in some organizations. I hope the people using them will collaborate in the development and evolution of this maturity model.

Please note that a maturity model is not intended to document everything that could or should be done to improve continually. Many factors that might influence continual improvement are not a question of maturity. Two simple examples are the organization’s structure and the amount of resources allocated to continual improvement. An organization is not more mature just because it spends more money on the activity, just as it is not necessarily less mature just because it has a command-and-control approach to getting work done.

Yet another maturity model?

Some of the generic process or organizational maturity models don’t really help to develop an organization’s ability to improve itself continually. Even if you consider continual improvement to be a process (and I do not – see below), calling it undefined, or measured, or adaptive is of little use in figuring out what to do next. I focus instead on a set of axes for increasing maturity, based on experiences in a variety of organizations. These axes are largely, but not entirely, independent of each other. That means that an organization may have different maturity levels along each axis, and each organization may find its own path for improving its capabilities.

Along each axis, I define six levels of maturity, each specifically related to the issues of implementing a continual improvement approach. There is nothing magic about the number six. In fact, it does not fit certain axes very well; three or four might be a better number for them. But the number is familiar from other maturity models. It also makes it easier to create diagrams that compare and contrast maturity levels. In time, it may prove more useful to reduce the number of levels on each axis.

The scales on the axes are intended to be ordinal, where zero is the lowest maturity and five the highest. But the intervals themselves mean nothing. An organization rated at four on a certain axis is not twice as mature as another organization with a rating of two.

The measurements of maturity along each axis are intended to be lead indicators for the value that may be obtained from continual improvement. Since the model itself has not yet been applied to a large sample of the overall population, it is too early to determine if, and how, those indicators may be related algorithmically to obtained value. That is a subject for future research.

The different levels on each axis are defined such that an organization will normally pass from one level to the next, without skipping levels. Attempts to skip maturity levels are not likely to bear fruit.

Finally, the levels are intended to reflect what an organization has already achieved, rather than what that organization is currently attempting to achieve. Resist the temptation to rate a group at a higher level merely because it is working on developing that capability.
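The rules above (ordinal levels from zero to five, no meaning attached to the size of the intervals, no skipping of levels) can be captured in a small data structure. The following Python sketch is illustrative only: the class and function names, and the choice of one integer per axis, are assumptions rather than part of the model. It compares assessments axis by axis instead of averaging them, and it flags jumps of more than one level between consecutive assessments for a second look.

```python
# Hypothetical sketch: one assessment on the six axes, stored as ordinal levels.
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Assessment:
    understanding: int
    intent: int
    method_and_tools: int
    cadence_and_momentum: int
    performance: int
    value: int

    def __post_init__(self) -> None:
        # Ordinal scale: only the integers 0 (lowest) to 5 (highest) are valid.
        for f in fields(self):
            level = getattr(self, f.name)
            if type(level) is not int or not 0 <= level <= 5:
                raise ValueError(f"{f.name} must be an integer from 0 to 5, got {level!r}")


def changes(before: Assessment, after: Assessment) -> dict[str, str]:
    """Report each axis as 'up', 'down' or 'same'; only the order of levels is used."""
    result = {}
    for f in fields(Assessment):
        old, new = getattr(before, f.name), getattr(after, f.name)
        result[f.name] = "up" if new > old else "down" if new < old else "same"
    return result


def skipped_levels(before: Assessment, after: Assessment) -> list[str]:
    """Axes that rose by more than one level between consecutive assessments.

    The difference only counts how many levels were crossed; it says nothing
    about "how much more mature" the group is. Such jumps deserve re-examination
    (they may also mean that the assessments were too far apart).
    """
    return [
        f.name
        for f in fields(Assessment)
        if getattr(after, f.name) - getattr(before, f.name) > 1
    ]


if __name__ == "__main__":
    q1 = Assessment(understanding=1, intent=2, method_and_tools=1,
                    cadence_and_momentum=0, performance=1, value=0)
    q2 = Assessment(understanding=2, intent=2, method_and_tools=3,
                    cadence_and_momentum=1, performance=1, value=0)
    print(changes(q1, q2))
    print(skipped_levels(q1, q2))  # ['method_and_tools']
```

Keeping the axes separate, rather than collapsing them into one score, matches the idea that each organization may follow its own path along each axis.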

In the following sections, I describe the six axes of maturity for continual improvement. For each axis, I provide a name, a description of the capability, a suggested way to measure the capability and a description of the signs that each level has been achieved.

Understanding

To what extent does the group understand the nature of continual improvement, why it is desirable and what its benefits are? Is this understanding made visible in the discussions and decisions of the group, or does it remain largely theoretical?

Continual improvement, as an approach, may be contrasted with other approaches to change and improvement in an organization. The most common of these other approaches is change via projects having fixed objectives, resources and duration. Understanding, then, is often manifested as an understanding of the difference between continual improvement and projects.

| Level | Description |
|---|---|
| 0 | The group has no awareness of continual improvement or its benefits, as a distinct approach |
| 1-2 | Certain group members understand continual improvement, but they do not yet dominate the understanding of the group as a whole |
| 3-4 | The group as a whole is aware of continual improvement as an approach and is able to cite its benefits |
| 5 | Continual improvement has been fully integrated into the vision and mission of the group |

Intent

To what extent does the group intend to improve continually, independently of its capacity to do so? Intent is a measure of commitment, engagement and motivation.

| Level | Description |
|---|---|
| 0 | Continual improvement is not on the agenda of the group. No one talks about it or shows any interest in the concept |
| 1-2 | The group members have started to gain consciousness of and commitment to continual improvement, but view it as something they do in addition to their “normal” work, to be performed if time is available |
| 3 | Continual improvement is integrated into the mission of the group, its members largely agree that continual improvement is desirable, and steps are taken to ensure sufficient time for improvement activities |
| 4 | Continual improvement has a high priority for the group members and in the actions undertaken by the group |
| 5 | The members of the group have fully integrated continual improvement and consider it part of their normal work |

Method and Tools

To what extent does the group have a method and related tools for making continual improvements? This axis does not measure whether or not such a method exists, but rather the degree to which the method is being used. If the Understanding axis concerns the “What” of continual improvement, the Method and Tools axis concerns the “How”: what is the maturity of how the group improves?

| Level | Description |
|---|---|
| 0 | The group has no method, approach or tools to realize continual improvement |
| 1-2 | The group experiments with various methods and tools to support continual improvement, often with methods in conflict or with redundant tools |
| 3 | The group has standardized on one or more methods and supporting tools and uses them regularly |
| 4 | The group can effectively apply its method and use its tools not only internally, but also in relation to the work of other groups |
| 5 | The group has shown the ability to tune and adapt its methods and tools as required by changing circumstances |

Cadence and momentum

How consistent is the cadence of improvement implementation? This axis links directly to the issues of group dynamics and how to maintain momentum in the work of a group. This, in turn, is related to the issue of leadership within a group. I consider leadership to be an issue generic to any group, not one specific to continual improvement. But because it is one of the main issues facing any group, I have added a short annex at the end of this article on how leadership and continual improvement may be related.

There are two fundamental metrics for this axis:

1. The variance between the actual improvement delivery cadence and the mean delivery cadence, or takt time, over a given period.

   For example, if 12 improvements are delivered in 360 days, then the mean delivery cadence is one improvement every 30 days. But the actual cadence might be, for example, 2 improvements in 90 days, followed by 1 improvement in 20 days, then 1 improvement in 45 days, etc. It may be difficult to maintain interest and momentum if the variance is high.

2. The acceleration of the cadence of improvement implementation.

   As a group matures, it should be able to deliver more and more improvements, at a faster and faster cadence. A running average of the takt time, measured over a useful period (for example, six months), will have a slope of 0 if there is no acceleration, a negative slope if the rate accelerates, and a positive slope if the group is dysfunctional and its rate of delivery slows down.
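Both metrics can be computed from a simple log of delivery dates. The following Python sketch is one possible reading, not the author's method. It assumes that deliveries are recorded as day numbers within the period, that the takt time is the period length divided by the number of improvements (as in the 12-in-360-days example), that "variance" means the mean squared deviation of the gaps between deliveries from that takt time, and that the "running average" is a simple moving average over a chosen number of gaps. All function names are hypothetical.

```python
# Hypothetical sketch of the two cadence metrics; names and choices are assumptions.
from statistics import mean


def intervals(delivery_days: list[float]) -> list[float]:
    """Days elapsed between consecutive improvement deliveries."""
    days = sorted(delivery_days)
    return [later - earlier for earlier, later in zip(days, days[1:])]


def cadence_variance(delivery_days: list[float], period_days: float) -> float:
    """Mean squared deviation of the actual gaps from the takt time.

    Takt time is assumed to be the period length divided by the number
    of improvements delivered (e.g. 360 days / 12 improvements = 30 days).
    """
    takt = period_days / len(delivery_days)
    return mean((gap - takt) ** 2 for gap in intervals(delivery_days))


def running_takt(delivery_days: list[float], window: int) -> list[float]:
    """Moving average of the last `window` gaps, one value per complete window."""
    gaps = intervals(delivery_days)
    return [mean(gaps[i - window + 1 : i + 1]) for i in range(window - 1, len(gaps))]


def slope(values: list[float]) -> float:
    """Least-squares slope of `values` against their position 0, 1, 2, ...

    Negative: takt time is shrinking, i.e. delivery is accelerating.
    Zero: no acceleration. Positive: delivery is slowing down.
    Needs at least two values.
    """
    xs = range(len(values))
    x_bar, y_bar = mean(xs), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


if __name__ == "__main__":
    steady = list(range(30, 361, 30))            # 12 deliveries, one every 30 days
    print(cadence_variance(steady, 360))         # 0.0
    accelerating = [0, 60, 110, 150, 180, 200]   # gaps of 60, 50, 40, 30, 20 days
    print(slope(running_takt(accelerating, 2)))  # -10.0
```

With twelve deliveries exactly 30 days apart the variance is zero; with gaps shrinking from 60 to 20 days, the moving-average takt time has a negative slope, which the text above reads as acceleration. The window size plays the role of the "useful period" and would need to be chosen to suit the group's delivery rate.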

| Level | Description |
|---|---|
| 0 | Improvements, to the extent that they occur at all, occur in a completely unpredictable, seemingly random way. In short, they are few and far between. There is no sense of urgency to make improvements |
| 1-2 | The group shows periods of a sustained cadence of improvement, but lapses into periods of inactivity from time to time |
| 3 | The group is able to apply its adopted methods and tools to deliver a steady flow of improvements |
| 4 | The group is not only consistent in its ability to deliver improvements, but it demonstrates the ability to accelerate the rate at which it delivers improvements |
| 5 | Not only does the group accelerate the cadence of its improvements, it shows itself capable of making breakthroughs in barriers to improvement. These barriers are the cost-effectiveness barriers beyond which it is undesirable to go, unless breakthrough innovations are made |

Performance

How efficient and effective is the group in implementing improvements? This axis largely measures how the group’s effort is divided between implementing improvements and planning, coordinating and controlling its own activities. The effort required to implement improvements corresponds to what Lean calls value-adding activities. I refer to the other types of effort as “coordination effort” or even “coordination cost”; they correspond roughly to what Kanban calls transaction and coordination costs.

The fundamental metric of this axis is the ratio of coordination effort to improvement implementation effort.
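As an illustration of this metric, here is a minimal Python sketch. It assumes (this is not from the article) that the group keeps an effort log in hours, with each entry tagged either as implementation effort or as coordination effort; the tags and the function name are hypothetical.

```python
# Hypothetical sketch of the Performance axis metric; the effort log format is an assumption.
from collections import defaultdict

IMPLEMENTATION = "implementation"  # value-adding work on the improvements themselves
COORDINATION = "coordination"      # planning, coordinating and controlling the group's own work


def coordination_ratio(entries: list[tuple[str, float]]) -> float:
    """Coordination effort divided by implementation effort (lower is better)."""
    totals: dict[str, float] = defaultdict(float)
    for kind, hours in entries:
        if kind not in (IMPLEMENTATION, COORDINATION):
            raise ValueError(f"unknown effort type: {kind!r}")
        totals[kind] += hours
    if totals[IMPLEMENTATION] == 0:
        # No implementation effort recorded: the ratio is unbounded.
        return float("inf")
    return totals[COORDINATION] / totals[IMPLEMENTATION]


if __name__ == "__main__":
    log = [("coordination", 30), ("implementation", 10),
           ("coordination", 10), ("implementation", 10)]
    print(coordination_ratio(log))  # 2.0
```

Computed for successive periods, a falling ratio is what the progression through the levels below describes; the sketch deliberately does not map ratio values to levels, since the model defines no such thresholds.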

| Level | Description |
|---|---|
| 0 | The effort of the group is completely disproportionate to the few results it demonstrates. The effort of the group is largely devoted to planning, coordination and control activities, rather than implementing improvements. The group is frequently blocked in its activities |
| 1 | The effort of the group devoted to planning, coordination and control is still very high, but the number of blockages is reduced and some simple improvements start to be delivered |
| 2 | The group continues to lower the ratio of coordination activities to implementation activities, especially for quick-win improvements of limited scope and complexity |
| 3 | The group is able to identify and implement improvements over a range of complexity with few false starts and few activities that fail to deliver the expected improvements |
| 4-5 | The group is able to implement improvements of varying scope and complexity, with only a very small fraction of its effort being devoted to planning, coordination and control. This level is typically the result of having fully internalized the adopted methods |

Value

To what degree is the group able to manage improvements for value? This axis is distinct from the actual value obtained from any improvements. All the axes of maturity should be taken together as lead indicators of that economic value, which should be measured independently of, and as a corrective to, the efforts to improve the continual improvement capabilities.

The fundamental metric of this axis is the degree to which a group consistently succeeds in implementing improvements that have been prioritized on the basis of their value to customers.

| Level | Description |
|---|---|
| 0 | The value of the improvements is not taken into account at any phase of identifying, prioritizing, designing or implementing improvements |
| 1 | The value of improvements is taken into consideration on an ad hoc basis |
| 2 | The group has a method using an ordinal scale for estimating the value of the improvements it proposes (for example, High/Medium/Low) and uses that method to prioritize the proposals |
| 3 | The group uses numerical methods to assess value in economic terms, in addition to the capabilities of level 2 |
| 4 | A feedback mechanism enables the group to assess and improve the accuracy and pertinence of its value assessments, in addition to the capabilities of level 3 |
| 5 | The group demonstrates its ability to consistently predict the value of its improvements within a small range and with a high degree of probability |
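Levels 4 and 5 call for feedback on the accuracy of value estimates. One possible way to provide it, sketched here in Python under assumptions that are not from the article, is to record a predicted value range before each improvement, measure the value actually obtained afterwards, and track two numbers: how often the actual value fell inside the predicted range, and how wide the ranges were. This is in the spirit of the calibrated estimates described by Douglas Hubbard, but the record format, function names and figures are hypothetical.

```python
# Hypothetical sketch of a feedback check on value estimates; the record format is an assumption.
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class ValueRecord:
    predicted_low: float   # lower bound of the value estimated before the work
    predicted_high: float  # upper bound of that estimate
    actual: float          # value measured after delivery


def hit_rate(records: list[ValueRecord]) -> float:
    """Share of improvements whose measured value fell inside the predicted range."""
    return sum(r.predicted_low <= r.actual <= r.predicted_high for r in records) / len(records)


def mean_relative_width(records: list[ValueRecord]) -> float:
    """Average range width relative to its midpoint (assumes positive values); smaller is tighter."""
    return mean(
        (r.predicted_high - r.predicted_low) / ((r.predicted_high + r.predicted_low) / 2)
        for r in records
    )


if __name__ == "__main__":
    history = [  # illustrative figures only
        ValueRecord(10_000, 20_000, 15_000),
        ValueRecord(5_000, 8_000, 9_000),  # outside the range: the estimate was too low
        ValueRecord(1_000, 3_000, 2_500),
        ValueRecord(40_000, 60_000, 45_000),
    ]
    print(hit_rate(history))                       # 0.75
    print(round(mean_relative_width(history), 2))  # 0.63
```

A high hit rate obtained with very wide ranges says little; level 5, as described above, implies both a high hit rate and narrow ranges.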

Reporting on maturity levels

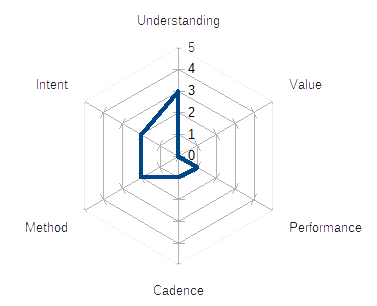

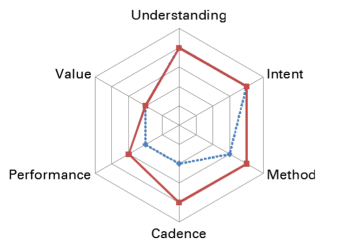

Once the maturity of an organizational unit has been assessed, it is useful to share that information. A radar chart is perhaps the most useful way of displaying the results of an assessment graphically. An example is shown in Fig. 1.

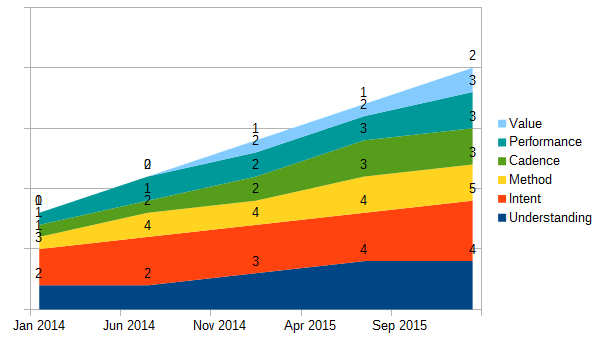

It is also useful to show the evolution of maturity levels for an organizational entity over time. A radar chart can be very difficult to read for such purposes. A stacked area chart might be somewhat easier to interpret at a glance, as shown in Fig. 2.

Is continual improvement a process?

If I ask this question, it is not to get lost in a pedantic discussion about what we mean by processes. My purpose, instead, is to underline my belief that by far the most effective form of continual improvement is the improvement that has become embedded in the daily working practices of everyone in an organization. I gave an illustration of how this could work in my presentation Daily Improvement with Kanban. This is also a fundamental assumption of kaizen, as a component of the Toyota Production System. But when we start to treat improvement as a separate and distinct process, with its own process owner, its own managers and its own tools, then continual improvement tends to be considered something you do in addition to your own work, rather than a fully integrated part of that work. In my experience, such an approach will have unnecessary difficulty getting off the ground, will never really fly and will tend to require high levels of overhead just to keep it going.

Leadership and Continual Improvement§

As I indicated above, I believe that the role of leadership in a group is a generic issue, not one specific to continual improvement. However, the internal organization of a group is often one of the major blockages to increasing the maturity of continual improvement. Therefore, I propose here a set of leadership maturity levels.

| Level | Description |
|---|---|
| 0 | There is no leadership in the group |
| 1 | A central leader emerges who champions the effort of the group, but leadership might be contested |
| 2 | The central leadership is consolidated, perhaps after some false starts |
| 3 | The leadership of the group becomes increasingly distributed, with more and more group members playing leadership roles |
| 4 | The need for leadership, as a specific type of activity in the group, becomes less and less important, as all group members become more confirmed in their dedication and motivation |
| 5 | The usefulness of leadership has withered away, as all group members are able to support the mission of the group with agility, without needing leadership from anyone else |

You will see from the table above that I do not consider leadership to be a sine qua non for the success of a continual improvement initiative. It is, however, a very important factor in the early development of any group and, in turn, contributes to the increasing maturity of that group and its success in fulfilling its mission. In short, one of the hallmarks of a very mature group is the lack of strong, centralized leadership. That being said, most groups are not very mature, so leadership is indeed a major issue for them.ƒ

I should add here that as the mission and vision of a group change, its need for leadership may also change. Therefore, increasing leadership maturity is less a slope to climb than a series of cycles or spirals.

ℑ ℑ ℑ

If you find this model useful, please let me know. All suggestions for improvement, if based on real experience and not just theory, are welcome.


♠I have now added another dimension to those described in this article. Please see Collaboration Maturity Levels, too.

§Many thanks to Jan Vromant for raising the issue of leadership in this context.

ƒThe reader will readily make the connection between the maturity levels proposed here and Bruce Tuckman’s Forming, Storming, Norming, Performing model for group development.

This is absolutely great

My experience: it is indeed possible to use a well-defined, methodical approach to improvement. The ISM Method is based on a solid structure that has been proven in practice many times.

I’ve learned to limit the cycle (takt) to a 3 month period, as this was always limited by the pressure of regular work.

The improvement cycles can be structured, in the way they select their goals from a set of options (problem inventory), and in the way they are planned, coordinated, managed, reported, etc.

The ability to improve is limited by the ability of management to focus on improvement. It is very easy for managers to get distracted by ‘projects’, urgent changes, etc. They tend to grab every opportunity they can find to escape from a structured improvement process. That means that continual improvement is more of a cultural thing than a technology thing; it is highly dependent upon the awareness of quality in the group. Most of your axes depend upon that culture.

– Understanding: this directly refers to the culture, the quality awareness.

– Intent – same here.

– Methods and tools: again the same.

– Cadence/momentum and performance: these aspects are more related to technical skills.

– Value: this is the core axis. Everything should ultimately be measured here.

Is it a process? Not in terms of managing a new set of activities; it should simply be incorporated into the current line of duty. Again, it is a matter of culture, which determines its success.

Leadership? This factor strongly influences the culture. A nice axis, as you described it 🙂

Some minor comments:

– “with skipping levels” should probably be “without skipping levels”

– “Method and tools” seems to refer to the most important capability of consistently working on improvement, instead of referring just to the instruments…

– On “Cadence”: improvement is always competing with day-to-day business. The idea that improvements can always be accelerated would mean that, in time, you would spend far more time on improvements than on delivery itself. That is an unrealistic idea.

– “Value” is a core axis, but also one of the most difficult ones. Just think of all the times we have tried to prove the value of IT itself… If we can’t really quantify that, how could we quantify improvements to that value?

Thank you for your thoughtful comments, Jan. I have corrected the error that you indicated.

While I disagree with most of what you say, I would like to single out just a few points that I find the most egregious.

While you are perfectly correct in talking about culture, I think it is a mistake to consider culture only at the level of the enterprise. In my experience, different units within an enterprise and, indeed, different teams, can have vastly different cultures. Furthermore, I find that most discussions (not yours) about “culture” leave the question at such a high and fuzzy level that the discussion really doesn’t help anyone. Instead, it tends to portray “culture” as some insurmountable monolith. This view does a disservice to anyone trying to improve things.

A maturity model is, of course, not at all a process. I strenuously object to anyone who might portray it as such.

You have misunderstood my portrayal of the “value” axis. I explicitly stated there that it is NOT about how much value the improvements bring. A simple thought experiment will show that an immature group can make an improvement – typically grabbing some low hanging fruit – that brings a lot of value. But that same group might be incapable of doing any other improvements, making a mockery of being “continual”. I agree completely that improvements need to be measured relative to the value obtained from them and that this is indeed a bottom line item. So, kindly re-read my description of the value axis.

I do not agree that cadence is a technical matter. For me, it is fundamentally the result of the attitudes of the people involved. It is also influenced by the capacity of the method to break improvements down into small, digestible pieces.

Finally, regarding the method in use, I do believe that there are methods that allow organizations to dissolve the mental model that contrasts “daily work” or “business as usual” with “improvement”. I believe that it is possible for a method to embed continual improvement into daily work. In this respect, some methods are probably more useful than others when it comes to increasing improvement maturity. Indeed, the whole issue of making improvement an inherent part of your “culture” is measured in several of the axes.

I don’t know whether there are any other maturity models around, but I certainly like the one you elaborate here! I understand and support your reasoning.

Markus, I don’t think any of the standard frameworks cited for service management really address the issue directly. ITIL merely refers to using the maturity model in COBIT. It also provides guidelines for implementing CSI, which is just an adaptation of Kotter’s change approach. But that is not at all the same thing as a maturity model. COBIT and Val IT (with which COBIT ultimately aligned itself) use what has become the traditional levels of maturity, but are not focused on the practices of continual improvement, per se. So they are much too high level for my purposes. The same is true for most of the other maturity models I have seen. They all think along the lines of, “If you use a maturity model, that will help you to continually improve.” But I am turning the question on its head and focusing on the specific practices of continual improvement. If you will, I am looking at continual improvement of continual improvement. I am not aware of other models that take this approach.

Robert

I think this is a good idea but there are a few things that I want to comment.

1) It is a bit too complicated with too many axes.

2) Predicting value exactly can be difficult. For example, an innovation can cut risk significantly but not remove it completely. I don’t think you can predict the value. In other cases, there are always many factors changing at the same time, and exact measurements are not possible.

3) Is the speed of improvement really necessary, or even a good thing? Maybe yes if the level is bad, but not if it is already very good.

4) I think leadership is needed, but a good team needs less of it in its daily work. This may be a matter of semantics. I think you actually mean management more than leadership.

1) I appreciate that there are more axes than I would have wanted. But what to remove???

2) I fully agree that most organizations have a great deal of trouble predicting value with any degree of reliability. This is one of the great mysteries of modern management, in my view. But I do not agree that it is hopeless. I do think most organizations can predict value with 2-3 orders of magnitude greater accuracy than is usually the case (and the usual case is slightly worse than a random guess). I think the advice of Douglas Hubbard in this regard is an excellent start. The fact that it is difficult to disambiguate the cause of changes in value is noted and agreed, though.

3) It is not so much the speed with which improvements are delivered as the fact of delivering them with a regular cadence. I have observed the changes I document in various organizations. Underlying my interest in cadence is the belief that you want to be good at defining your problems and improvements in small increments. As soon as you can do this regularly, you can also deliver improvements with a regular cadence. But if you stick with the big project approach to improvement, then it is indeed difficult to maintain any sort of regular cadence. But think about any service you use. Which is better: seeing regular but small improvements that indicate the provider is aware of your needs and is dedicated to making changes in a reasonable time; or having to wait a year or two to see any changes at all (by which time you may have simply dropped the service in favor of another, or your own needs may have changed, so the “improvements” may be solving a problem that no longer exists)? What I do NOT believe is that a provider will ultimately reach a level where improvements are no longer needed. All we see today points to the need to constantly be able to accelerate how fast we do things. In any case, I am not so much worried about the completely dysfunctional organizations that would do everyone a favor by going out of business (and there are far too many of these).

4) I certainly do NOT mean management. And I think I agree fully with you. Thus, my mention of the withering away of leadership as a team reaches the most mature levels.

Good article, Robert. Here are my two cents on this subject. I work on the ISO committees for different standards, 20000, 27001, 38500, 30105 and 15504 (330xx), to name a few. In this list there is a series that deals specifically with Process Capability and Organizational Maturity: the ISO/IEC 15504 series (in the process of being renumbered as the 330xx series). These ISO standards define it all: how to approach process capability and organizational maturity, how to define a process reference model – PRM (so that we have our point of comparison), and how to define a process assessment model – PAM (used to evaluate the capability of each single process included in our PRM). The series also goes into even more detail, guiding organizations on common language for the terms related to this domain, as well as on how to conduct these assessments and on the qualifications of the people conducting them.

This ISO series of standards was in fact used by TUDOR (a Luxembourg research center, now called LIST) to develop TIPA, a method to assess the capability of ITIL-modelled processes, and also by ISACA when it developed the capability assessment model for COBIT-modelled processes. It is a great tool. It was developed by multiple experts covering multiple regions of the globe and multiple types of organizations. I personally consider that ITIL has a fairly good layout of the activities that should be present in a ‘process’ for improving, and TIPA already has a robust tool that also covers this process. I would strongly recommend having a look at this series of standards and specifically at TIPA. I have been involved in multiple assessments and audits over the past 20 years, and I can tell you all that there is value in what is included in this series of standards. I have also been teaching auditing and how to conduct assessments for many years, and the feedback from learners on this has been very positive.

Hi Robert, first of all thank you for sharing this. This is *very* good!

I wanted to hear your thoughts about the “definition” of CI. I think there is an implicit assumption in the maturity assessment questionnaire that the maturity assessor understands what CI is supposed to mean.

But imagine a group doing a self-assessment. A group may think they are doing CI, but really they are doing a bunch of change projects. (All projects presumably have an intent to improve things, and hence the group will see this as their CI effort, which I believe is not what CI stands for.) If that is the group’s understanding, they could carry out this (self-)assessment without any question indicating that their understanding of CI is wrong. In effect, they may be doing a project management maturity assessment.

You actually highlight this difference between CI and projects under the Understanding heading in your article.

I wonder if another element to a definition of CI links in with your Cadence axis. Could you say that “CI” tends to be more project based when it is on the slow cadence end of the axis, and will be more “true” CI when it moves towards the fast cadence end of the axis? Maybe that’s a way of explaining the difference?

I am looking for a way to convey to a group what “true” CI is and how doing improvement projects is not actually what CI means. (without discarding the value of such projects – an organisation probably needs both) I am hoping that once they understand this difference, the approach that the group leadership thinks needs to be in place will change (for the better).

So really, the question for the Understanding axis is: what is it about CI that the group needs to understand to score higher on this axis?

Does that make sense?

Pieter, my intention for the Understanding axis was somewhat different from your interpretation. If I understand your view, you are considering that there is such a thing as “true continual improvement”, whereas everything else is “false continual improvement”. Thus, you want to measure the degree to which people have the “correct” understanding.

My purpose was rather to say that continual improvement requires some form of approach. The Understanding axis asks to what degree the people understand that approach. This allows for multiple approaches to CI, including approaches that might not yet be imagined today.

That being said, I do agree that there is something specific about continual improvement that makes it distinct from “just doing some changes”. For me, the essence of continual improvement is the engagement to make improvement a part of the regular, so-called “business as usual” activities. Thus, it contrasts sharply with a dichotomy of work into “run the business” and “change the business”.

One interest of a maturity model is how it allows people to be engaged with the concept while, at the same time, doing a poor or moderate job of practicing it. We all have to start adopting a practice somewhere. It would be exceedingly demoralizing and discouraging to be trying and learning, only to be told, “Oh no. You call that continual improvement? Hardly!”

Thank you Robert for your response.

I understand what you mean and that makes a lot of sense.

Hi Robert,

I just came across the following definition on the ASQ website which probably answers my question.

“The terms continuous improvement and continual improvement are frequently used interchangeably. But some quality practitioners make the following distinction:

• Continual improvement: a broader term preferred by W. Edwards Deming to refer to general processes of improvement and encompassing “discontinuous” improvements—that is, many different approaches, covering different areas.

• Continuous improvement: a subset of continual improvement, with a more specific focus on linear, incremental improvement within an existing process. Some practitioners also associate continuous improvement more closely with techniques of statistical process control.”

Source: http://asq.org/learn-about-quality/continuous-improvement/overview/overview.html

I would be in the camp that finds that specific definition of “continuous improvement” to be rather arbitrary, of little use in practice and virtually impossible to communicate to the “average” employee in an organization. The issue of distinguishing continual from continuous is exacerbated when the organization does not speak English natively.